14.3 Managing statistical data and metadata

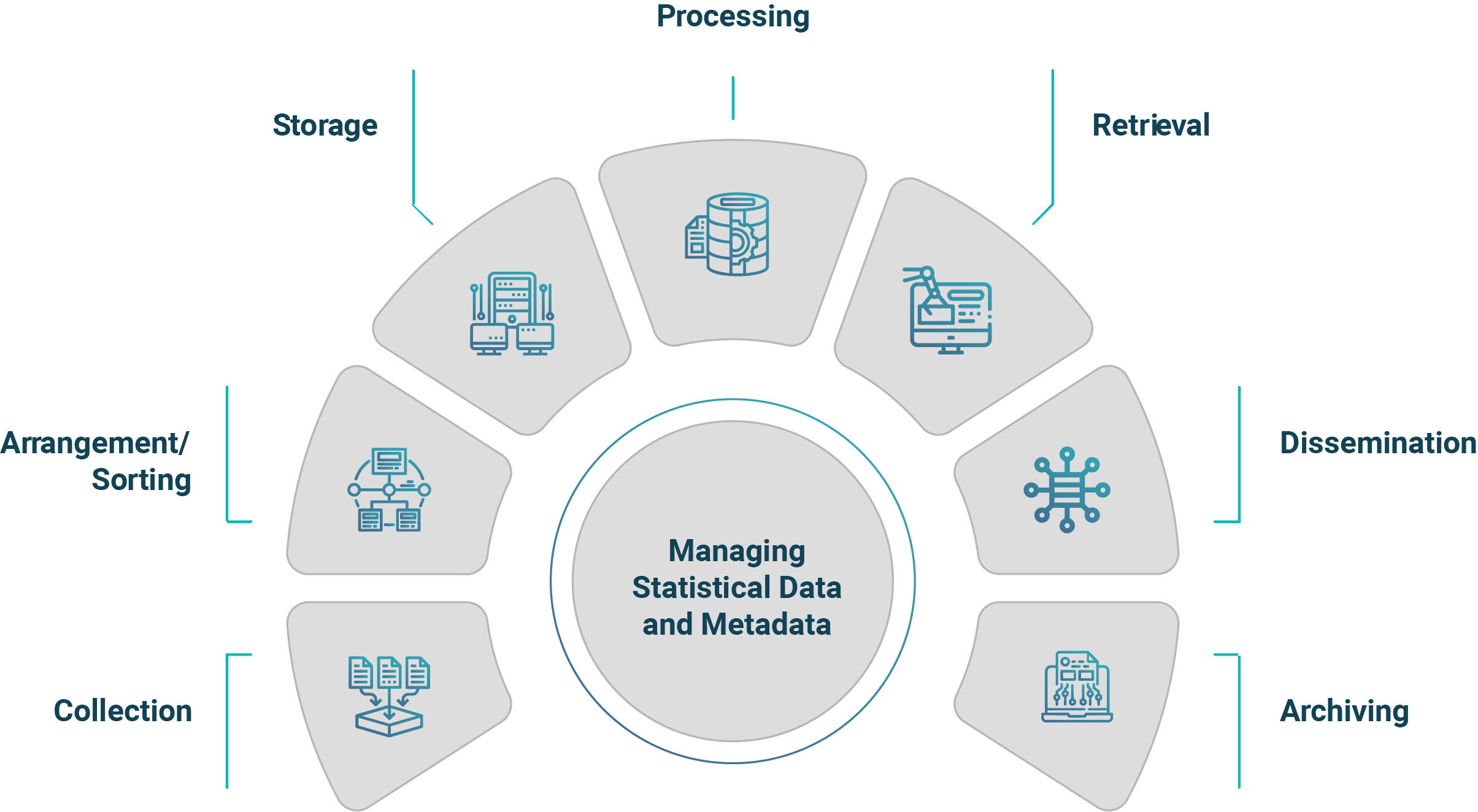

Data and metadata management covers documents and records, archiving, knowledge, standards and access rights, as well as the data and metadata themselves. A data and metadata management policy should consider the entire statistical production process, from data collection to dissemination and archiving, as described below (derived from GAMSO):

Collection covers surveys and censuses (see Chapter 9.2 — Sample surveys and censuses) and administrative data. This is typically the most labour-intensive phase of the statistical process. The organization of data collection operations needs to satisfy a number of strategic goals. The management of collection includes the effective planning and coordination of data collection across statistical domains, deciding what data are needed and which data items need to be collected directly from respondents. It should ensure the continuous improvement of the efficiency of data management. It should work towards the effective reduction of response burden and costs for both respondents and the statistical authority, and follow the principle of “collect once, use many times” to avoid duplicate requests being made to those providing data. It is fundamentally important to keep individuals’ and businesses’ information secure and confidential, regardless of whether it has been collected from survey or non-survey sources.

Arrangement/sorting covers the organization of data into collections of data. It includes data modelling, which is the process of creating a data model for the data to be stored in a database. It should, where possible, use existing standards such as SDMX Data Structure Definitions (see Chapter 15.4.5 — Statistical Data and Metadata Exchange (SDMX)) for efficiency, reusability and interoperability.
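As a minimal sketch of what data modelling against a structure definition can look like, the Python below models a simplified data structure in the spirit of an SDMX Data Structure Definition: dimensions identify an observation, a measure carries its value, and attributes qualify it. The class names, the structure identifier, the code lists and the sample observations are illustrative assumptions; this is not an implementation of the SDMX standard or of any SDMX library.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Dimension:
    """A concept that identifies an observation, with an optional code list."""
    concept_id: str
    codes: frozenset = frozenset()  # empty set means "uncoded" (e.g. time)


@dataclass
class DataStructure:
    """Simplified, hypothetical stand-in for a data structure definition."""
    structure_id: str
    dimensions: list
    measure: str = "OBS_VALUE"
    attributes: list = field(default_factory=list)

    def validate(self, observation: dict) -> list:
        """Return a list of problems found in one observation (empty if none)."""
        problems = []
        for dim in self.dimensions:
            value = observation.get(dim.concept_id)
            if value is None:
                problems.append(f"missing dimension {dim.concept_id}")
            elif dim.codes and value not in dim.codes:
                problems.append(f"{dim.concept_id}={value!r} not in code list")
        if self.measure not in observation:
            problems.append(f"missing measure {self.measure}")
        return problems


# Illustrative structure for an annual population table (assumed code lists).
population_dsd = DataStructure(
    structure_id="DSD_POPULATION",
    dimensions=[
        Dimension("FREQ", frozenset({"A", "Q", "M"})),
        Dimension("REF_AREA", frozenset({"PH", "CA", "GB"})),
        Dimension("SEX", frozenset({"F", "M", "_T"})),
        Dimension("TIME_PERIOD"),
    ],
    attributes=["OBS_STATUS"],
)

# Made-up observations: one complete, one with an unknown area and gaps.
good = {"FREQ": "A", "REF_AREA": "PH", "SEX": "_T",
        "TIME_PERIOD": "2020", "OBS_VALUE": 1000.0}
bad = {"FREQ": "A", "REF_AREA": "XX", "TIME_PERIOD": "2020"}

print(population_dsd.validate(good))
print(population_dsd.validate(bad))
```

Declaring the structure once and validating every dataset against it is what allows the same definition to be reused across collection, storage and exchange.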

Storage covers database management systems (DBMS), which are designed to define, manipulate, retrieve and manage data in a database. Such database systems vary according to the capacity of an NSO and can range from simple spreadsheet-based systems to highly sophisticated (hierarchical) database systems (see Chapter 15.6 — Basic IT infrastructure needs and skill requirements).

Processing covers the sub-processes of the GSBPM “Process” phase: integrate data, classify and code, review and validate, edit and impute, derive new variables and units, calculate weights, calculate aggregates and finalise data files.
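To make a few of these sub-processes concrete, the sketch below passes a toy dataset through review and validate, edit and impute, and calculate aggregates steps in plain Python, using weights that are assumed to be already calculated. The records, the validity rule (income must not be negative), the choice of mean imputation and the weights are all invented for illustration; in practice these methods are chosen by methodologists.

```python
from statistics import mean

# Hypothetical microdata: income may be missing (None) or invalid (negative).
records = [
    {"id": 1, "region": "North", "income": 100.0, "weight": 2.0},
    {"id": 2, "region": "North", "income": None, "weight": 1.0},
    {"id": 3, "region": "South", "income": -5.0, "weight": 1.5},
    {"id": 4, "region": "South", "income": 300.0, "weight": 0.5},
]


def review_and_validate(rows):
    """Blank out values that break the assumed rule income >= 0."""
    out = []
    for row in rows:
        row = dict(row)  # copy so the input is left untouched
        if row["income"] is not None and row["income"] < 0:
            row["income"] = None
        out.append(row)
    return out


def edit_and_impute(rows):
    """Fill missing incomes with the mean of the valid ones and flag them."""
    valid = [r["income"] for r in rows if r["income"] is not None]
    fill = mean(valid)
    return [{**r,
             "income": fill if r["income"] is None else r["income"],
             "imputed": r["income"] is None} for r in rows]


def calculate_aggregates(rows):
    """Weighted mean income per region."""
    totals = {}
    for r in rows:
        num, den = totals.get(r["region"], (0.0, 0.0))
        totals[r["region"]] = (num + r["income"] * r["weight"], den + r["weight"])
    return {region: num / den for region, (num, den) in totals.items()}


clean = edit_and_impute(review_and_validate(records))
aggregates = calculate_aggregates(clean)
print(aggregates)
```

Keeping each sub-process as a separate function mirrors the GSBPM breakdown and makes it possible to replace one step (for example, the imputation method) without touching the others.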

Retrieval covers the production and dissemination systems an NSO has in place to locate and retrieve data, information and knowledge.

Dissemination covers the systems an NSO has in place to disseminate data. This covers the GSBPM sub-processes: update output systems, produce dissemination products, manage release of dissemination products, promote dissemination products and manage user support (see Chapter 11 - Dissemination of Official Statistics).

Archiving covers the systems used to archive data and metadata and to remove data (both physical and digital) in line with the relevant rules, regulations and legislation.

Statistics Canada - Data collection planning and management (🔗).

Philippine Statistics Authority OpenSTAT platform, a national data portal that now serves as the central database for publicly available information (🔗).

Philippine Statistics Authority Statistical Survey Review and Clearance System (SSRCS). This mechanism aims to: ensure sound design for data collection; minimize the burden placed upon respondents; effect economy in statistical data collection efforts; eliminate unnecessary duplication of statistical data collected; and, achieve better coordination of government statistical activities.

UK Office for National Statistics - Data use and management policies (🔗).

14.3.1 Managing statistical data

An NSO manages data from many sources including censuses, surveys, administrative data and, increasingly, new, non-traditional data sources often referred to as Big Data (see Chapter 9.5 — Big Data).

Common Statistical Data Architecture

Guidance for data management is provided by a reference architecture for data, such as the Common Statistical Data Architecture (CSDA). A data architecture is composed of models, policies, rules or standards that govern which data are collected, how they are stored, arranged, integrated, and used in data systems. Well-designed data architecture can result in improved timeliness and more disaggregated statistics at higher frequencies.

The CSDA can support an NSO in the design, integration, production and dissemination of official statistics. It acts as a reference template for an NSO in the development of data architecture (see Chapter 15.2.13 — Common Statistical Data Architecture) that can guide IT staff in the development of systems to be used by statisticians in the production of statistical products. The CSDA shows how an NSO can organize and structure its processes and systems for efficient and effective management of data and metadata in order to help in the modernization and the improved efficiency of statistical production processes. It can help an NSO manage the newer types of data sources such as Big Data, scanner data, citizen-generated data and web scraping.

The CSDA covers the following:

How information is managed as an asset throughout its lifecycle.

Accessibility of information.

Describing data to enable reuse.

Capturing and recording information at the point of creation/receipt.

Using an authoritative source.

Using agreed models and standards.

Information security.

CSDA focuses on capabilities related to data and metadata. These include data input; data transformation; data integration and provisioning; metadata management; data governance; provenance and lineage; and security. CSDA is a “data-centric” view of an NSO’s architecture: it emphasizes the value of data and metadata, the need to treat data as an asset, and the way an NSO manages its data and metadata.

The Modernisation Maturity Model

The companion to CSDA is the Modernisation Maturity Model (MMM). The MMM and its roadmap focus on how to build organizational capabilities through the implementation of the models and standards identified as key to statistical modernisation, such as the Generic Statistical Business Process Model (GSBPM), and its extension the Generic Activity Model for Statistical Organizations (GAMSO), the Generic Statistical Information Model (GSIM) and the Common Statistical Production Architecture (CSPA). See Chapter 15.4 — Use of standards and generic models in an NSO.

The MMM is a self-evaluation tool to assess the level of organizational maturity against a set of pre-defined criteria. Maturity in the context of modernisation has several distinct dimensions, and an NSO may be at a different level of maturity in each of them. A maturity self-assessment should be carried out by a cross-cutting group involving members of the corporate planning, statistical production, information, methodology, applications and technology functions in order to ensure a comprehensive review.

The MMM allows statistical organizations to evaluate their current level of maturity against a standard framework. This assessment will provide a clear picture of the organizational maturity level, which can then be compared between organizations, and between statistical domains/business units within an organization.

The Modernisation Maturity Roadmap

The MMM has an associated roadmap, the Modernisation Maturity Roadmap (MMR), that provides clear guidelines on the steps to take to reach higher levels of organizational maturity more quickly and efficiently. The roadmap includes supporting instruments to help statistical organizations, at different maturity levels, to implement the different standards. The MMM and the roadmap can help an NSO regardless of its capacity level. They acknowledge that there can be different maturity levels depending on the statistical domain or part of the NSO. The roadmap addresses the needs of an NSO, particularly those in the earlier stages of modernisation, to have clearer information about how to progress in the most efficient way.

14.3.2 Managing statistical metadata

Principle 3 of the United Nations Fundamental Principles of Official Statistics (UNFPOS) states that to facilitate a correct interpretation of the data, the statistical agencies are to present information according to scientific standards on the sources, methods and procedures of the statistics. NSOs must be fully transparent for all users about their methods and provide comprehensive metadata linked to statistical data that is publicly accessible. Many NSOs have standard metadata templates that accompany each release of statistics.

An NSO must ensure that its users are properly informed regarding the location of data, how data were defined and compiled, the level of quality assigned to the data, and what related data can be used for comparison or to provide context. NSOs are obliged to describe accurately and openly the strengths and weaknesses of the data they publish and to explain how much inference the data can support. Thus, metadata provide information to enable the user to make an informed decision about whether the data are fit for the required purpose. Metadata management is an overarching GSBPM process. Some of the metadata serve as internal guidance on statistical production, and some support the user of statistics.

Statistical metadata consist of data and other documentation that describe data in a formalised way and describe the processes and tools used in the production and use of statistical data. Metadata describe the collection, processing and dissemination of data as well as relating directly to the data themselves. All published or released statistics should be accompanied by metadata.

Many NSOs have implemented metadata-driven systems that use metadata as input to configure processes, so that a common process can serve despite the differences between statistical domains (and organizations). Using metadata as input parameters in this way can create highly configurable process flows.

Good metadata management is essential for the efficient operation of statistical business processes. Metadata are present in every phase of a statistical production process and should be captured as early as possible and stored in each phase. Metadata management systems are vital; their goal is to make it easier for a user or application to locate specific data. This requires designing a metadata repository, populating it, and making the information in it easy to locate and use. A number of data and metadata management systems are available to an NSO (see Chapter 11.4 — Metadata - providing information on the properties of statistical data).
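The metadata-driven approach described above can be sketched in a few lines: one generic validation routine is configured entirely by variable-level metadata, so the same code serves two different statistical domains. The metadata fields (`type`, `min`, `max`, `allowed`), the two domain definitions and the sample records are hypothetical and chosen only for illustration.

```python
# Hypothetical variable metadata for two statistical domains.
LABOUR_FORCE_METADATA = {
    "age": {"type": int, "min": 15, "max": 120},
    "status": {"type": str, "allowed": {"employed", "unemployed", "inactive"}},
}
BUSINESS_SURVEY_METADATA = {
    "employees": {"type": int, "min": 0},
    "turnover": {"type": float, "min": 0.0},
}


def validate_record(record, metadata):
    """Check one record against the rules described in the metadata.

    The function contains no domain-specific logic: every rule comes
    from the metadata passed in.
    """
    errors = []
    for name, rules in metadata.items():
        if name not in record:
            errors.append(f"{name}: missing")
            continue
        value = record[name]
        if not isinstance(value, rules["type"]):
            errors.append(f"{name}: expected {rules['type'].__name__}")
            continue
        if "min" in rules and value < rules["min"]:
            errors.append(f"{name}: below minimum {rules['min']}")
        if "max" in rules and value > rules["max"]:
            errors.append(f"{name}: above maximum {rules['max']}")
        if "allowed" in rules and value not in rules["allowed"]:
            errors.append(f"{name}: value not in classification")
    return errors


labour_errors = validate_record({"age": 12, "status": "employed"},
                                LABOUR_FORCE_METADATA)
business_ok = validate_record({"employees": 40, "turnover": 1250.5},
                              BUSINESS_SURVEY_METADATA)
business_errors = validate_record({"employees": -1}, BUSINESS_SURVEY_METADATA)

print(labour_errors)
print(business_ok)
print(business_errors)
```

Adding a new domain under this design means writing a new metadata definition rather than new code, which is the sense in which the process flow becomes configurable.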

The Common Metadata Framework (🔗) is a repository of knowledge and good practices related to statistical metadata and identifies the following principles for metadata management:

Metadata handling

Manage metadata with a focus on the overall statistical business process model.

Make metadata active to the greatest extent possible to ensure they are accurate and updated. Active metadata are metadata that drive other processes and actions.

Reuse metadata where possible for statistical integration as well as efficiency.

Preserve history of metadata by preserving old versions.

Metadata authority

Registration: Ensure the registration process and workflow associated with each metadata element is well documented, so there is clear identification of ownership, approval status, date of operation, and so forth.

Single source: Ensure that a single, authoritative source exists for each metadata element.

One entry/update: Minimize errors by entering once and updating in one place.

Standards variations: Ensure that variations from standards are tightly managed, approved, documented, and visible.

Relationship to statistical cycle/processes

Make metadata-related work an integral part of business processes across the organization.

Ensure that metadata presented to end-users matches the metadata that drove the business process or was created during the process.

Describe the metadata flow together with the statistical and business processes, alongside the data flow and business logic.

Capture metadata at their source, preferably automatically as a by-product of other processes.

Exchange metadata and use them for informing both computer-based processes and human interpretation. The infrastructure for the exchange of data and associated metadata should be based on loosely coupled components, with a choice of standard exchange languages, such as XML.

Users

Ensure that users are clearly identified for all metadata processes and that all metadata captured will create value for them.

Metadata are diverse. Different views correspond to different uses of the data; users require different levels of detail; and metadata appear in different formats depending on the processes and goals for which they are produced and used.

Ensure that metadata are readily available and useable in the context of the external or internal users’ information needs.

Ensure feedback is collected from the knowledge management process and technology user.
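Several of the principles above (single source, one entry/update, preserved versions, documented registration) can be illustrated with a small in-memory registry. This is a sketch only: the `MetadataRegistry` class, its method names, the status values and the example definitions are hypothetical, and a production system would add persistence, access control and an approval workflow.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class MetadataVersion:
    """One immutable version of a metadata element."""
    definition: str
    owner: str
    status: str          # e.g. "draft" or "approved" (assumed values)
    valid_from: date
    version: int


class MetadataRegistry:
    """Single authoritative store: each element is entered and updated in one place."""

    def __init__(self):
        self._history = {}  # element id -> list of MetadataVersion, oldest first

    def register(self, element_id, definition, owner, valid_from, status="draft"):
        """Add a new element, or a new version of an existing one.

        Old versions are never overwritten, so the history is preserved.
        """
        versions = self._history.setdefault(element_id, [])
        entry = MetadataVersion(definition, owner, status, valid_from,
                                version=len(versions) + 1)
        versions.append(entry)
        return entry

    def current(self, element_id):
        """Return the latest version of an element."""
        return self._history[element_id][-1]

    def as_of(self, element_id, when):
        """Return the version in force on a given date, or None.

        Assumes versions were registered in order of increasing valid_from.
        """
        in_force = [v for v in self._history[element_id] if v.valid_from <= when]
        return in_force[-1] if in_force else None


registry = MetadataRegistry()
# Made-up definitions, for illustration only.
registry.register("household", "Persons sharing a dwelling.",
                  "Social statistics", date(2015, 1, 1), status="approved")
registry.register("household", "Persons sharing a dwelling and meals.",
                  "Social statistics", date(2020, 1, 1), status="approved")

print(registry.current("household").version)
print(registry.as_of("household", date(2018, 6, 30)).definition)
```

Because every version records its owner, status and start date, the registry can answer both "what is the definition now?" and "which definition applied when these data were produced?".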

14.3.3 The statistical metadata system

Statistical metadata systems play a fundamental role in NSOs. Such systems comprise the people, processes and technology used to generate and manage statistical metadata. A statistical metadata system is an integral part of an NSO. It is cross-cutting by nature and needs the involvement of managers, subject-matter statisticians, methodologists, information technology experts, researchers, respondents and end-users. The needs and obligations of these participants vary according to whether they take part in the system as metadata users, metadata suppliers, designers, developers, producers, administrators and/or evaluators.

As noted in the UNECE publication ‘Statistical Metadata in a Corporate Context: A guide for managers’ (🔗) the statistical metadata management system can effectively support the following functions:

Planning, designing, implementing and evaluating statistical production processes.

Managing, unifying and standardising workflows and processes, and making workflows more transparent by sharing work instructions.

Documenting data collection, storage, evaluation and dissemination.

Managing methodological activities, standardizing and documenting concept definitions and classifications.

Managing communication with end-users of statistical outputs and gathering of user feedback.

Improving the quality of statistical data, in particular consistency, and transparency of methodologies.

Managing statistical data sources and cooperation with respondents.

Improving discovery and exchange of data between the NSO and its users.

Improving the integration of statistical information systems with other national information systems. Growing demands to use administrative data for statistical purposes require better integration and sharing of metadata between statistical and administrative bodies, to ensure coherence and consistency of exchanged information.

Disseminating statistical information to end-users. End-users need reliable metadata for searching, navigation, and interpretation of data. Metadata should also be available to assist post-processing of statistical data.

Improving integration between national and international organizations. International organizations are increasingly requiring integration of their own metadata with metadata of national statistical organizations in order to make statistical information more comparable and compatible, and to monitor the use of agreed standards.

Developing a knowledge base on the processes of statistical information systems, to share knowledge among staff and to minimize the risks related to knowledge loss when staff leave or change functions.

Improving the administration of statistical information systems, including administration of responsibilities, compliance with legislation, performance and user satisfaction.

Facilitating the evaluation of costs and revenues for the statistical organization.

Unifying statistical terminology as a vehicle for better communication and understanding between managers, designers, subject-matter statisticians, methodologists, respondents and users of statistical information systems.

The OECD data and metadata reporting and presentation handbook (🔗).

Metadata Management - GSBPM and GAMSO (🔗).

UNECE Terminology on Statistical Metadata (🔗).

Metadata Concepts, Standards, Model and Registries (🔗).

A basic framework for the role of the statistical metadata system (SMS) in statistical organizations as defined in the UNFPOS (🔗).

Core principles for metadata management, UNECE (🔗).

UNECE Common Metadata Framework – A guide for managers (🔗).

Eurostat publishes a large amount of metadata. This approach follows principle 15 of the European statistics code of practice (🔗), which states that data should always be made available with supporting metadata.

UNECE Statistical Metadata in a Corporate Context: A guide for managers (🔗).

Philippine Statistics Authority – Inventory of Statistical Standards in the Philippines (ISSiP).